Imagine Constructing a Digital Brain

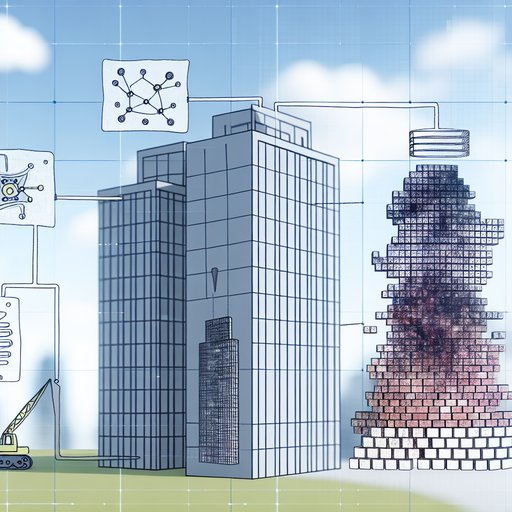

Imagine constructing a digital brain: a sprawling network of code that can write poetry, solve math problems, or banter about the latest memes. This is the ambitious task of creating a large language model (LLM), the technology powering tools like ChatGPT, Gemini, or Grok. From the 1960s, when early AI struggled to parse simple sentences, to today’s models crafting essays, the leap is staggering. LLMs combine vast datasets, clever algorithms, and raw computational power to mimic human-like understanding. But how are these giants built? From collecting raw data to deploying models in data centers, the process is a fascinating mix of science, art, and ethics. Let’s walk through the stages of crafting an LLM, in a tour meant for anyone with technical curiosity.

Gathering the Raw Material: Data Collection

Every LLM begins with data—mountains of it. Think of data as the clay for sculpting a masterpiece. To train a model that can discuss history, code Python, or explain quantum mechanics, engineers need a massive, diverse dataset. This includes web pages (via projects like Common Crawl), digitized books, academic papers, and even social media posts. For example, The Pile, an 825GB dataset, mixes Wikipedia, GitHub code, and PubMed articles to capture a broad slice of human knowledge.

Quantity isn’t enough: quality and diversity are critical. If the data skews toward one perspective, like Western news, the model risks inheriting biases, misunderstanding cultures, or parroting outdated views. Engineers use deduplication to remove repeated text and quality filters to strip out noise such as spam, ads, or gibberish, aiming for a dataset that is clean and representative. This is no small feat: web data is a chaotic stew of blog posts, comments, and clickbait, requiring sophisticated curation.
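To make the cleaning step concrete, here is a minimal sketch of exact-match deduplication plus a crude noise filter. The thresholds (`min_words`, `min_alpha_ratio`) and helper names are illustrative assumptions, not values from any real pipeline; production systems typically add near-duplicate detection and learned quality classifiers on top.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def looks_like_noise(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Crude heuristic (assumed thresholds): too short, or mostly symbols and digits."""
    words = text.split()
    if len(words) < min_words:
        return True
    letters_and_spaces = sum(ch.isalpha() or ch.isspace() for ch in text)
    return letters_and_spaces / len(text) < min_alpha_ratio


def clean_corpus(documents: list[str]) -> list[str]:
    """Drop noisy documents, then drop exact duplicates after normalization."""
    seen_hashes: set[str] = set()
    kept: list[str] = []
    for doc in documents:
        if looks_like_noise(doc):
            continue
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # an identical document was already kept
        seen_hashes.add(digest)
        kept.append(doc)
    return kept


raw = [
    "The transformer architecture was introduced in 2017.",
    "the  transformer architecture was   introduced in 2017.",  # duplicate
    "$$$ !!! 1234 #### >>>> 9999 %%%% ^^^^",                     # gibberish
    "Buy now",                                                   # too short
    "Common Crawl publishes large snapshots of the public web.",
]
cleaned = clean_corpus(raw)
print(cleaned)
```

Hashing the normalized text keeps memory use small, since only a fixed-size digest is stored per document rather than the document itself.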

Ethical challenges loom large. Who owns the data? Were users’ posts scraped with consent? In 2023 and 2024, authors filed lawsuits against AI firms, alleging their books were used in training without permission, sparking debates over copyright and “fair use.” Privacy concerns also arise, with critics demanding opt-in policies for data use. Despite these hurdles, curated datasets form the foundation of every LLM, setting the stage for turning raw text into intelligence.

The Recipe: Algorithms and Model Architecture

If data is the clay, algorithms are the sculptor’s tools. At the core of modern LLMs lies the transformer architecture, a 2017 breakthrough that revolutionized AI. Transformers are like a super-smart librarian, instantly finding connections between words in a sentence, no matter how far apart. This power comes from attention, a mechanism that acts like a spotlight, illuminating key words to grasp context. For example, in “The cat, which slept all day, ate,” attention links “cat” to “ate,” ignoring distractions.
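The spotlight idea can be sketched as scaled dot-product attention for a single query. The two-dimensional vectors below are hand-picked for illustration (a real model learns them, and uses hundreds or thousands of dimensions); the function names are assumptions for this sketch.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the attention-weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy vectors, chosen by hand so that "cat" matches what "ate" is looking for.
tokens = ["cat", "slept", "day"]
keys = [[4.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
query_for_ate = [4.0, 0.0]

weights, output = attend(query_for_ate, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.4f}")
```

Because the query aligns with the key for "cat" and is orthogonal to the others, nearly all of the attention weight lands on "cat", which is the linking behavior described above.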

Picture a transformer as a layered cake. Each layer processes text, learning patterns like grammar, context, or humor. A model might have dozens of layers and billions of parameters, the tunable knobs that store what it has learned. GPT-3, with 175 billion parameters, dwarfs earlier AI with mere millions. Training involves feeding the model text and adjusting its parameters so that it better predicts the next token (a word or word fragment), using algorithms like gradient descent to reduce prediction error. It’s a balancing act: too little training, and the model is clueless; too much, and it overfits, memorizing data instead of generalizing.
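As a toy illustration of next-token training, the sketch below fits a bigram model (one row of scores per previous word) with per-example gradient descent. It is a deliberately tiny stand-in for a transformer: the corpus, learning rate, and step count are assumptions chosen so the example runs instantly.

```python
import math

corpus = "the cat sat on the mat the cat ate".split()
vocab = sorted(set(corpus))
index = {word: i for i, word in enumerate(vocab)}
pairs = [(index[a], index[b]) for a, b in zip(corpus, corpus[1:])]

# One row of logits per previous word: these are the model's only parameters.
logits = [[0.0] * len(vocab) for _ in vocab]


def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def average_loss():
    """Mean cross-entropy of the true next word under the current model."""
    return sum(-math.log(softmax(logits[p])[n]) for p, n in pairs) / len(pairs)


def train_step(learning_rate=0.5):
    """One pass over all (previous, next) pairs, updating after each pair."""
    for prev, nxt in pairs:
        probs = softmax(logits[prev])
        for j in range(len(vocab)):
            # Gradient of cross-entropy w.r.t. logit j is p_j - 1[j == nxt].
            grad = probs[j] - (1.0 if j == nxt else 0.0)
            logits[prev][j] -= learning_rate * grad


loss_before = average_loss()
for _ in range(200):
    train_step()
loss_after = average_loss()

probs_after_the = softmax(logits[index["the"]])
best_guess = vocab[probs_after_the.index(max(probs_after_the))]
print(f"loss {loss_before:.3f} -> {loss_after:.3f}; after 'the' the model guesses '{best_guess}'")
```

The loss cannot reach zero here because "the" is followed by both "cat" and "mat" in the corpus; the model can only learn the relative frequencies.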

New trends are emerging. Efficient architectures, like mixture-of-experts models, route each token to only a few specialized subnetworks (experts) instead of running the whole model, which cuts compute costs. These innovations hint at leaner, faster LLMs. Still, the transformer remains king, its attention mechanism a culinary art, blending flavors of language into coherent text. The result is a model ready for the next challenge: powering it up.
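A rough sketch of the mixture-of-experts idea follows. The experts here are trivial stand-ins (each just scales its input) and the router scores are passed in by hand; in a real model both the experts and the router are learned networks, so treat every name and number as an assumption for illustration.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Stand-in for an expert feed-forward network: scales its input."""
    return lambda vector: [scale * x for x in vector]


experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]


def moe_layer(vector, router_scores, top_k=2):
    """Run only the top_k highest-scoring experts and mix their outputs."""
    ranked = sorted(range(len(experts)), key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:top_k]
    gate = softmax([router_scores[i] for i in chosen])  # renormalize over chosen experts
    output = [0.0] * len(vector)
    for weight, expert_id in zip(gate, chosen):
        expert_out = experts[expert_id](vector)  # the other experts never run
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return chosen, output


chosen, output = moe_layer([1.0, -1.0], router_scores=[0.1, 2.0, 0.3, 2.0])
print(chosen, output)
```

With `top_k=2` out of four experts, only half of the expert computation is performed for this token, which is where the savings come from.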

Powering the Beast: Compute Requirements

Training an LLM is like running a digital marathon, demanding immense computational muscle. Modern models require thousands of specialized chips (GPUs or TPUs) working in concert. A single training run for a model like GPT-3 might take weeks. One published estimate puts GPT-3’s training at roughly 1,300 MWh of electricity and about 550 tons of CO2-equivalent emissions, on the order of what a hundred US homes use in electricity over a year.

Why so much power? Training means pushing hundreds of billions of tokens (words and word fragments) through the model, and every token involves arithmetic on every parameter, so the operation count runs far beyond the trillions. The work is spread across server clusters, with data split for parallel processing. Techniques like distributed computing and mixed-precision training improve speed, but costs remain high: a single training run can cost millions of dollars. Hardware pushes boundaries, too. Cerebras’ Wafer-Scale Engine, a single chip roughly the size of a dinner plate, packs an entire silicon wafer’s worth of compute into one unit to cut training time.
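A back-of-the-envelope calculation shows why training takes weeks. The sketch uses the widely quoted rule of thumb of about 6 floating-point operations per parameter per training token. The token count (300 billion) and the cluster (1,024 chips at 312 TFLOP/s peak, 40% utilization) are assumptions for illustration, not a description of how any particular model was trained.

```python
def training_flops(parameters: float, tokens: float) -> float:
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6.0 * parameters * tokens


def training_days(total_flops: float, num_chips: int,
                  peak_flops_per_chip: float, utilization: float) -> float:
    """Wall-clock days if every chip sustains `utilization` of its peak rate."""
    sustained = num_chips * peak_flops_per_chip * utilization
    return total_flops / sustained / 86_400  # 86,400 seconds per day


gpt3_params = 175e9  # parameter count from the text
gpt3_tokens = 300e9  # assumed training-set size in tokens
flops = training_flops(gpt3_params, gpt3_tokens)

# Hypothetical cluster, chosen only to make the arithmetic concrete.
days = training_days(flops, num_chips=1024,
                     peak_flops_per_chip=312e12, utilization=0.4)
print(f"{flops:.2e} FLOPs, about {days:.1f} days on the assumed cluster")
```

Under these assumptions the run needs on the order of 10^23 operations and about four weeks, which matches the "weeks" scale mentioned above; halving utilization or chip count doubles the time.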

Sustainability is a growing concern. Some operators, Google among them, buy solar and wind power to cut data center emissions. Software frameworks, like PyTorch, orchestrate training, while cooling systems prevent overheating. The compute demands are colossal, but they birth a digital giant, ready for refinement.

Teaching Manners: Alignment and Fine-Tuning

A freshly trained LLM is like a brilliant but unruly child. It can generate text but might spout nonsense, biases, or harmful content. Alignment and fine-tuning shape it into a helpful, safe companion. Alignment ensures outputs match human values, like truthfulness and respect. One method, reinforcement learning from human feedback (RLHF), involves annotators rating responses, teaching the model to prioritize clear, appropriate answers. For example, if it responds sarcastically to a factual query, annotators downvote it, nudging it toward clarity.
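One piece of RLHF, learning a reward model from annotator preferences, can be sketched with a pairwise loss: the model is penalized whenever the reply annotators preferred does not score higher than the one they rejected. The two hand-made features (`is_sarcastic`, `answers_question`) and the linear reward are assumptions for illustration; real reward models are neural networks over the full text, and the later step of optimizing the LLM against the reward is omitted here.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """-log(sigmoid(margin)): small when the preferred reply scores higher."""
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))  # stable form for negative margins


def reward(weights, features):
    """Linear reward over hand-made features (an assumption of this sketch)."""
    return sum(w * f for w, f in zip(weights, features))


# Features: [is_sarcastic, answers_question]
comparisons = [
    # (features of the preferred reply, features of the rejected reply)
    ([0.0, 1.0], [1.0, 1.0]),  # clear answer preferred over sarcastic answer
    ([0.0, 1.0], [0.0, 0.0]),  # clear answer preferred over a non-answer
    ([1.0, 1.0], [1.0, 0.0]),  # even a sarcastic answer beats no answer
]

weights = [0.0, 0.0]
learning_rate = 0.1
for _ in range(100):
    for chosen, rejected in comparisons:
        margin = reward(weights, chosen) - reward(weights, rejected)
        push = 1.0 - 1.0 / (1.0 + math.exp(-margin))  # 1 - sigmoid(margin)
        for i in range(len(weights)):
            # Gradient descent on the pairwise loss.
            weights[i] += learning_rate * push * (chosen[i] - rejected[i])

print(f"sarcasm weight {weights[0]:.2f}, answers-question weight {weights[1]:.2f}")
```

After training, the sarcasm weight is negative and the answers-question weight is positive, so the learned reward ranks a clear answer above a sarcastic one, mirroring the annotators' downvotes.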

Fine-tuning refines the model for tasks like answering questions or writing code. This uses curated datasets, often smaller but targeted. It can also shape tone: Grok, for instance, is presented as playful yet professional. Continued fine-tuning after launch helps models adapt to new slang and user feedback.

Balancing alignment is tricky. Over-alignment can stifle creativity—one model refused fictional violence, frustrating writers. Under-alignment risks misinformation. It’s a delicate dance, transforming the model into a trusted tool users can rely on.

Ready for the World: Deployment to Data Centers

With training and fine-tuning complete, the LLM is ready for its debut. Deployment moves it into production, often in data centers—sprawling facilities with servers, cooling systems, and high-speed networks. LLMs power real-time applications, like translation in Google Translate or customer service bots, handling millions of daily queries.

Deployment isn’t just copying files. Engineers optimize for inference (generating responses) using techniques like quantization, which stores weights at lower numeric precision to cut memory use and speed up computation at a small cost in accuracy. Models may be split across servers for scalability, with load balancers distributing traffic. Latency is critical: users expect instant replies, so data centers use hardware like NVIDIA’s A100 GPUs. Maintenance is ongoing: engineers update models to keep up with new trends and fix bugs.
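Quantization can be sketched in a few lines. The example below uses symmetric per-tensor 8-bit quantization, one common scheme among several; the weight values are made up, and real systems often quantize per channel or per group and use calibrated scales.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric quantization: map floats onto integers in [-127, 127]."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.02, -1.27, 0.5, 0.0, 0.9]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.5f}")
```

Each weight now fits in one byte instead of the two or four bytes of a typical floating-point format, and the rounding error per weight is at most half of `scale`.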

Costs are significant. Running a model in the cloud racks up bills, prompting tiered plans like SuperGrok for higher usage. Scalability and reliability remain challenges, but deployment brings LLMs to life, serving users worldwide.

Conclusion

Crafting an LLM is a monumental task, blending data, algorithms, compute, and human ingenuity. From scraping the web to sculpting transformers, powering massive training runs, aligning outputs, and deploying to data centers, each step pushes technology’s boundaries. The result is a tool that educates, entertains, and innovates—but also raises ethical questions about bias, privacy, and energy use.

LLMs are reshaping our world, powering assistants, code editors, and creative tools. What’s next? Might they narrate augmented reality tours or learn from your chats in real-time? The journey of these digital giants is a testament to human ambition. Try Grok for free on grok.com, debate AI ethics on X, or dive into this evolving field—your curiosity is the next step.

This text was generated with the help of LLM technology.